That was the topic of a discussion hosted by Fasoo at the 2023 Gartner Security and Risk Management Summit.

Fasoo CTO and COO Ron Arden spoke with Tad Mielnicki, Co-Founder and COO of Overwatch Data, and Jamie Holcombe, CIO of the US Patent and Trademark Office, about the challenges of using generative AI responsibly.

In Part 1 of this conversation, we focused on the potential risks of using generative AI and some measures to mitigate them.

What advice does our panel have for organizations as they try to manage the risks of using AI while enjoying the benefits? Find out in Part 2 of the conversation:

Ron Arden: If we go back six or seven months and think about the post-COVID world and cybersecurity, everybody has had to deal with the same thing: a hybrid workplace, because people are working from everywhere. A lot of organizations are trying to implement zero-trust platforms because we need to think about our data in a different way. There’s no such thing as a walled garden anymore. We really need to think about how we explicitly decide which people and machines we trust to access data.

And then suddenly, this thing called ChatGPT came along.

Now, AI is not brand new, but all of a sudden, this thing went from nothing to 100 million people around the planet using it. We need to start thinking about how we are actually going to use this and what issues come with it.

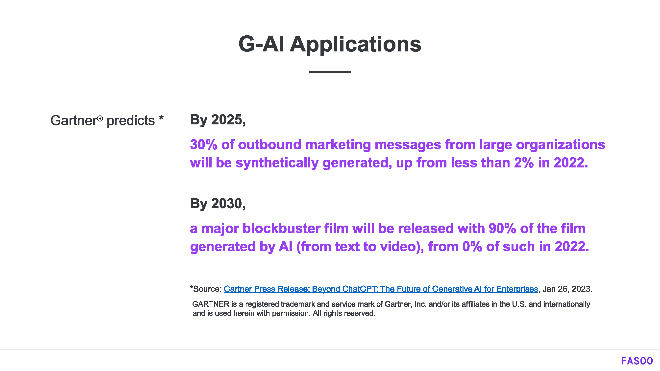

Gartner has a couple of interesting predictions. Within a couple of years, a lot of companies are going to be using AI, and generative AI specifically, to generate outbound marketing messages. And a few more years down the road, we’ll see a major blockbuster film where the vast majority of it is produced by AI.

If you’ve watched some of the interesting things online, go to YouTube and you can see all the variations on Wes Anderson films. Those are all generated by AI engines, so we’re probably going to get to that point a little bit faster. So, you want to start thinking about how you’re going to use this stuff in the workplace.

There are a lot of public large language models out there. ChatGPT has all the buzz right now. Google, Microsoft, and everybody else are coming up with their own. Tad’s company has some in his organization. But I think what you’re going to wind up seeing is a combination. People are going to use public LLMs, but they’re also going to create their own, because whether you’re in a law firm, a manufacturing company, or finance, you want language models and AI targeted at the data you’ve got, and you need to figure out the appropriate way to use that data.

An area where a lot of companies are already using this is in any kind of service environment. If you go online and you start chatting, you’re probably chatting with either an AI bot or an AI-enhanced bot. So again, this stuff is here, but the question is, how do we use this stuff safely?

You want to start looking at some of the risks of AI.

Let’s say I’m a user, and I want to figure out a good commencement address to give as the president of a university. The reason I say that is I was at my niece’s graduation a couple of weeks ago, and the university president said the first part of her speech was written by ChatGPT. She said it was very, very general.

But the problem is that people are starting to put proprietary or very sensitive data into these models, and that data then becomes part of the model. And AI may or may not give us good information. It can hallucinate and produce answers that are completely wrong. Now we have this feedback loop that introduces a lot of risk into the environment. So, one area that has received a lot of attention is who owns what when AI is involved.

Back to those YouTube videos I was talking about. Some of them are now actually attributing authorship to AI. But the question is, if I’m an author and I put content into a public LLM and the AI generates something, what happens?

Who owns it? Do I own it? Does the AI own it? Does the author of the tool own it?

It becomes a little bit complicated. So, one of the things we started thinking about was how organizations will police this. And I think legislation is probably going to have to start appearing.

Ron Arden: What are some examples of potential risks or vulnerabilities that arise from the use of generative AI and what measures can be taken to mitigate them?

Jamie Holcombe: Well, I’m glad you started out with the whole thing about public versus private because it’s very important, who owns what. So, the US Patent and Trademark Office has an obligation to ensure that the public knows all of those patents and trademarks, right? I mean, we have to get that out. That’s public information.

But when you submit a patent application, that’s when we have to keep it private. And so, there is a real risk in using any public tool like ChatGPT, Copilot, or anything like that. If you put private information into it, that information becomes part of the model, and because of that, you’re actually violating the law. We’re not able to give claims over to anything outside of our walls. And so, I’ve had to prohibit its use for all of our examiners. You can’t do that. It’s against the law: Title 35, section 122. Look it up. It’s pretty cool.

Anyway, you were talking before about copyright, so just to make sure everybody knows, there are four areas of intellectual property.

One, patents.

Two, trademarks. Easy, right?

Three is copyright. It’s not even in the same branch of government. It’s over there in the legislative branch, at the Library of Congress. We do work very closely, though, and we know the Register of Copyrights; she actually came from the patent office. So, we’re collaborating tightly, and it’s a good thing.

And finally, the fourth piece of intellectual property is trade secrets. Trade secret law has to do with company secrets. You don’t have to patent company secrets. You can keep them, right? Coca-Cola’s recipe, et cetera. But when people violate trade secret laws, you need to get the FBI involved, because intellectual property is only as good as your willingness to defend it in court. Whoa.

You don’t own the results of using ChatGPT or other LLMs

Okay. So how does that relate to the public use of ChatGPT or other types of generative AI? The fact is, believe it or not, you don’t even own the results of those artificial intelligence queries, because they’re fed back into the loop to supposedly train the model better. So, if you’re dealing with private information, you have to develop your own.

Tad Mielnicki: I’m going to piggyback on what you said, because what I think is going to be really challenging from a patent and copyright perspective is that whether you’re using a public LLM or running your own private LLM within your organization, these models rely on a two-way transaction: the user takes from the model and gets things answered, but also contributes back. So how we govern this is going to be really interesting, because even if you’re running a model inside your own private environment, you need to feed it information and data for it to become a better version of itself.

So where does that line between public and private go? I’m glad we don’t have to figure that out. I’m a little scared that the people in the legislature who are figuring it out probably don’t understand it either. So, we’re going to have to do a really good job of educating. But from a risk perspective, going back to your original question, I think the hallucination problem is something people don’t understand enough about. We’re starting to see examples in the news where this is becoming more and more problematic.

You need to understand the limitations of AI tools or you could get into trouble

Recently, two attorneys in New York wrote their entire case brief using ChatGPT. ChatGPT invented case law to back up their argument, and now they could potentially be disbarred. This is a phenomenal example: if you start to use these tools without understanding their limitations and what they really are, you end up with unverified over-reliance on something you don’t understand, and it’s going to get you in trouble. That’s the trouble with not understanding where the security risks are going to come from.

Jamie Holcombe: Amen. And I’ll tell you something about that. I’m not the first one to compare this to the nuclear age. When we were first understanding radioactivity and how to use it, how to split the atom, and so forth, there was a lot of fear involved, but sometimes there wasn’t enough respect. So, it’s a balance between fear and respect, and we have to have the discipline and the knowledge to understand these things.

There is a balance between fear and respect for the technology

The biggest things about ChatGPT are understanding where the data sources are and what algorithms are used. The difference between GPT-3 and GPT-4 is that one is open and the other is for commercial use. With one, in a sense, they tell you everything. With GPT-4, they don’t tell you anything. They’re not required to.

Back in the nuclear age, the Radium Girls used radioactive paint on watch dials so they would glow in the dark. They also used radioactive lipstick and nail polish. Those ladies died from the radioactivity. Now, I’m not saying generative AI is going to kill you, but you’ve got to respect it, at least enough to make sure you know where the data sources are, what discipline and algorithms are used, and how you’re actually training the models.

Tad Mielnicki: People feared the printing press at one point, right? So, let’s not get overly alarmed about AI, even though there are a lot of concerns there. If you think about how you’re going to use these models from the outset, they’ve basically given everybody an opportunity to use data more accurately and more efficiently. We are sort of democratizing data science. The offshoots of that are going to be really interesting to see over the next few years, and corruption will exist. But that happens with any new technology. Social media is great until it’s not, right? The Internet was great until people started putting up forums where you could buy and sell drugs and guns. And now there are all these concerns. So, it’s a balancing act that comes with education.

*

Do the scenarios mentioned in this conversation sound familiar? Most organizations are struggling with the same questions about generative AI.

Join us for part 2 of this conversation.

###

The transcript of this conversation has been shortened and edited for clarity and the blog format.